I’m concerned about inherent bias. There was a Star Trek episode about this.

Yes, but Data is not even an expansion of anything we are currently calling AI. I recall a rather long video about the first chess robot, which, after reviewing however many thousands of games, noticed that giving away the queen led to an immediate win in a very high percentage of them, and so tended to give away its queen without the closing strategy to back it up.

Hehe, ‘life’ is a learning experience. The only thing I have seen that has evolved quicker than AI is my children - maybe.

@kelley1 - I saw that episode when it aired the first time. Every episode. “It’s time for Star Trek!” every week. They were all (almost) very progressive for their time in terms of their social messages. A lasting tribute to Gene Roddenberry.

I fully agree about the point of that story. It is extremely impressive and universal. If sometime in the future we get the technology to create a mind rather than an amazing memory sink, the story will have even more relevance (though the Dune world argues the other side). Meanwhile, because the programming is linear and digital, there is currently no way for it to be like an analog orchestra of a billion parts, each part contributing its own song, permanently “on” and reacting to all the other songs, with no single path as there is in a computer.

One’s “mind” is that combination of “always on” parts, not parts that are switched “on” per message and never permanently. Each neuron is not sending a single pulse but an infinitely variable pattern that changes based on what else is happening, and even the transmission point between neurons alters what is passed based on what has gone before.

We think in metaphors, but the metaphor is never exactly the same thing. So we think of a mind in terms of a computer and vice versa, just as it was thought of as complex clockwork before computers. A computer may be closer than clockwork in some ways, but it is still not very close.

It is not by accident that the first computer concept was a complex clockwork.

The human mind is a complex orchestra of thoughts and emotions, creating a symphony of experiences. It is a source of wonder, with each thought and feeling a unique instrument contributing to the tapestry of our lives. Nurturing and cultivating the mind allows us to discover all the amazing things it is capable of.

… said ChatGPT. (The previous paragraph was written by ChatGPT.)

I’m not arguing that ChatGPT is sentient, but I would say that your position is slightly overconfident with regard to the human experience being untouchable by systems like these.

It took 66 years to go from the first flight (1903) to the moon landing (1969). Perhaps more applicable is that the average PC had about 32 MB of RAM in the year 2000, and now it’s more like 16 GB, roughly 500 times more in just 23 years. Who knows where we’ll be in about 5 or 10 years.
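For anyone who wants to check the RAM comparison, here is the arithmetic as a small Python sketch. It assumes binary units (1 GB = 1024 MB) and takes the 32 MB, 16 GB, and 23-year figures straight from the post; the variable names are just illustrative.

```python
import math

ram_2000_mb = 32          # typical PC RAM in 2000, per the post
ram_2023_mb = 16 * 1024   # 16 GB expressed in MB (binary units assumed)
years = 23

ratio = ram_2023_mb / ram_2000_mb        # overall growth factor
doublings = math.log2(ratio)             # how many times RAM doubled
years_per_doubling = years / doublings   # average time per doubling

print(f"growth: {ratio:.0f}x, {doublings:.0f} doublings, "
      f"one every {years_per_doubling:.1f} years")
# growth: 512x, 9 doublings, one every 2.6 years
```

Nine doublings in 23 years is a doubling roughly every two and a half years.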

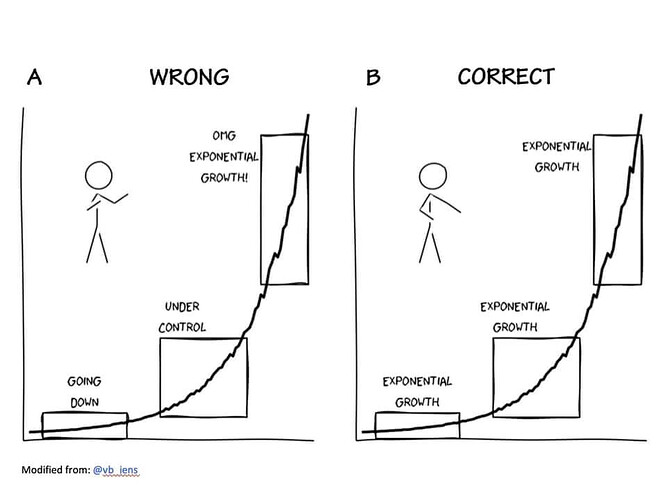

Beyond that, we’re on a curve and humans are really bad at recognizing those kinds of trends. We think things will keep on as they are now, which is a naive position when it comes to tech these days. (Or money or climate or any of a number of systems)

If anyone’s interested, there’s a great (long) piece on some of these concepts, laying out a somewhat alarming vision of where AI could be headed:

We’re in for an interesting next decade.

The problem is not one of size but of kind. To be aware, everything has to be “on”, and in a computer any particular bit is only “on” when the data is accessed and “off” the rest of the time. Each bit has only two answers, yes or no, while each brain cell is in infinite, permanent conversation with many connections, no two of which say the same thing in response to a signal, nor even the same thing over time; the connections themselves change too, breaking where they are silent and strengthening with frequent stimulation.

And while the signal itself, the back-and-forth flow of charged atoms across a barrier, is “digital”, it is not the individual pulses but their pattern that is significant, since each cell can register timing and patterns where the computer data cannot.

It is possible that one could “in theory” build such a machine, and Star Trek’s Data is such a “machine” (although a writer’s concept and the eventual reality might be very different), but we are nowhere near there yet, in spite of some stunning results from what we are doing.

Ever since I got my head around this, I’ve resigned myself to just observing without predicting.

Positive feedback, negative feedback, and tipping points. These all apply to any line where time is the X-axis. AI, climate, civil unrest…

I’ve thought for some time now that strong (human-like) AI won’t depend so much on MIPS as on learning how to “seed” it in such a way as to simulate millions of years of survival, along with the strange loops Douglas R. Hofstadter describes in his book I Am a Strange Loop.

@rbtdanforth: As you probably know, ChatGPT is fundamentally a “machine learning” program based on a neural network. It therefore harbors no true “intelligence”. Back in the 1980s I worked on natural language processing systems based on neural networks, funded by DARPA. This was after “back propagation” for neural network training was discovered and computers became just powerful enough for neural networks to be practical.

Today’s popular “AI” systems are fundamentally unchanged from 40 years ago. First you “train” the neural network, investing as much computer time and fancy hardware as you can afford, using whatever “training set” you have provided. After that, your newly birthed “AI” will quickly recognize “patterns” based on your training set. (“Garbage-in, garbage-out” applies here!)
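To make the “train first, then recognize patterns” workflow concrete, here is a deliberately tiny sketch: a single artificial neuron trained by gradient descent (the one-layer special case of backpropagation) in plain Python. It is a toy, not how ChatGPT or the 1980s DARPA systems were actually built; the function names, learning rate, and epoch count are arbitrary choices. The second run feeds it wrong labels to show “garbage in, garbage out”.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, epochs=5000, lr=0.5):
    """Fit one sigmoid neuron by stochastic gradient descent on cross-entropy loss."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            y = sigmoid(w1 * x1 + w2 * x2 + b)
            err = y - target  # gradient of the loss w.r.t. the neuron's input sum
            w1 -= lr * err * x1
            w2 -= lr * err * x2
            b -= lr * err
    return w1, w2, b

def predict(params, x1, x2):
    """The 'pattern recognition' step: apply the frozen weights to an input."""
    w1, w2, b = params
    return 1 if sigmoid(w1 * x1 + w2 * x2 + b) >= 0.5 else 0

# Training set: the logical AND function.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
params = train(AND)
print([predict(params, *x) for x, _ in AND])  # [0, 0, 0, 1]

# Garbage in, garbage out: flip every label and the neuron learns that instead.
GARBAGE = [(x, 1 - t) for x, t in AND]
bad = train(GARBAGE)
print([predict(bad, *x) for x, _ in AND])     # [1, 1, 1, 0]
```

Nothing in the trained weights “knows” what AND means; the neuron simply reproduces whatever mapping its training set contained.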

Moore’s Law has “turned” many times since the 1980s, such that what can be “learned” today makes 'bots such as ChatGPT seem truly “omnipotent”. But Oracles they are not! So, whenever you see “artificial intelligence” in this context, think rote “machine learning”, devoid of true “understanding”.

That was my point. If it is not “always on” and each bit “aware” of all the other bits and able to contribute an infinite variety of responses, then it cannot be “Hard AI”. Expert systems create a database of relationships and can do some basic combining. It can pay attention to more clues but what comes out has to have gone in, garbage or not.

This is an example of oversimplification. I can disprove that in ChatGPT right now:

invent a new word that sounds like english with a complete set of three definitions

Sure! Here is a new word I came up with: “flumplicate.”

- To make a mess or disorder out of something that was previously organized. Example: “I accidentally flumplicated my entire closet while trying to find my favorite shirt.”

- To express surprise or confusion through a combination of a facial expression and a noise. Example: “When I saw the surprise party, I let out a flumplicated gasp.”

- To act or move clumsily or awkwardly. Example: “I flumplicated my way through the dance routine, tripping over my own feet.”

Clearly this never “went in”, so your rule of thumb only applies to short thumbs.

Again, I’m not saying it’s sentient as we understand sentience, but it gets really complicated to define what it actually is and isn’t. Be careful with simple explanations; they lead to complacency and conservatism.
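Whether a model can only emit what “went in” depends on what counts as “in”. Here is a deliberately crude illustration, not how ChatGPT works (it is a large transformer over tokens, not a character table): a character bigram model trained on just two words, “flump” and “complicate”, can produce “flumplicate” even though that word never appears in its training data. The corpus and all function names are hypothetical, made up for the example.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # markers for the beginning and end of a word

def train(words):
    """Record which character has been seen following which (a bigram table)."""
    follows = defaultdict(set)
    for w in words:
        chars = [START] + list(w) + [END]
        for a, b in zip(chars, chars[1:]):
            follows[a].add(b)
    return follows

def can_generate(model, word):
    """True if every adjacent character pair in `word` was seen during training."""
    chars = [START] + list(word) + [END]
    return all(b in model.get(a, ()) for a, b in zip(chars, chars[1:]))

def sample(model, rng, max_len=12):
    """Random walk through the bigram table from START until END (or max_len)."""
    out, cur = [], START
    while len(out) < max_len:
        cur = rng.choice(sorted(model[cur]))  # sorted so a seeded rng is reproducible
        if cur == END:
            break
        out.append(cur)
    return "".join(out)

corpus = ["flump", "complicate"]
model = train(corpus)
print(can_generate(model, "flumplicate"))  # True
print("flumplicate" in corpus)             # False
print(sample(model, random.Random(0)))     # some word stitched from seen pairs
```

Every individual step was seen in training; the combination was not. Whether that counts as “new” is exactly the disagreement in this thread.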

OK do it again but this time make the word have one definition that means a general unease with the implications of AI systems

Sure! Here is a new word I came up with: “artifire.”

- A general unease or anxiety about the potential consequences and implications of artificial intelligence and its increasing presence in society. Example: “As AI continues to advance, many people are feeling a growing sense of artifire about what the future may hold.”

- The act of questioning or challenging the ethics and motivations behind the development and use of artificial intelligence. Example: “Some experts are calling for greater artifire in the tech industry, arguing that the potential risks of AI should be carefully considered before moving forward with new projects.”

- A feeling of discomfort or unease when interacting with artificial intelligence or technology that simulates human behavior. Example: “Even though I know it’s just a chatbot, I can’t shake the feeling of artifire when I talk to it. It’s like it’s watching me or something.”

Of greater interest would be the likelihood of puns. If you asked for a baby monitor, would it show electronics or lizards? If someone said they were going to get drunk, would this ever be the image…

Absolutely. The learning curve for an expert system is best if the expertise is narrow: no matter how fast it is, data acquisition is linear while the need is logarithmic.

You clearly understand what “machine learning” (ML) is not. I was not suggesting otherwise, but hoped to add to the discussion here.

The term “artificial intelligence” makes everyone who works with ML cringe. IMHO soon tech such as ChatGPT and DALL·E will be integrated into everything that we all use routinely (e.g., think Microsoft Office with a spell- and grammar-checker on steroids).

This is already beginning to affect education. Some claim that the student “essay” is already dead. ChatGPT is a free online version of Cliffs Notes that “knows everything”!

Reminds me of the drama regarding letting students take exams with HP-35 calculators instead of just slide rules.

ChatGPT: Create a haiku about a Glowforge laser printer.

Laser beam slicing through

Thin sheets of wood and acrylic

Glowforge, a marvel

DALL-E: Photo of Glowforge laser cutter user worried about ChatGPT stealing their job.

Theory of mind is a whole field of study. While I wouldn’t consider myself “current” on all of that, I am pretty sure we are still not to the point of having a conclusive model of how humans think.

It’s important to be aware that academics working in that area sometimes assert things with an inappropriate level of confidence. George Lakoff’s metaphor stuff, for instance, is pretty speculative.

AI is everywhere. I might be a little off topic but maybe this will help some people with their AI explorations. I hadn’t heard of a bunch of the sites below.

Here is a list of AI resources for web designers by Vitaly Friedman

– For AI Portraits, use Lexica Aperture (https://lnkd.in/eg8She9W),

– For AI headlines and CTAs, use ChatGPT (https://chat.openai.com),

– For AI textures, icons and visual assets, use DALL-E (DALL·E 2),

– For AI logos, use LogoAI (https://www.logoai.com/),

– For AI font picking, use ChatGPT,

– For AI image retouching, use Background Remover (AI Background Remover – Remove Background From Image) and Remove Background (https://www.remove.bg/),

– For AI color palette generator, use Khroma (https://www.khroma.co),

– For AI image upscaling, use Let’s Enhance (https://letsenhance.io/),

– For AI doodles generator, use Autodraw (AutoDraw by Google Creative Lab - Experiments with Google),

– For AI eye-tracking/heatmap testing, use Visualeyes (https://lnkd.in/eZvXJXzy),

– For AI avatar generator, use StarryAI (starrytars | AI Avatar Generator),

– For AI-generated model photos, use Generated.photos (https://generated.photos/),

– For AI image editing, use Lensa (Lensa - Prisma Labs),

– For AI mock-ups generator, use uizard (https://uizard.io/).


He wasn’t the first to come up with it, but, kind of like continental drift theory, it makes a great many odd observations make sense. Then again, I remember when continental drift was considered speculative too. Even Aristotle almost noticed the metaphor concept.

You got a snort out of me: “If the Borg were to assimilate you, they’d probably become very confused about spelling.”

Thanks so much for the links. I’ve been wanting to do a little exploring. This looks like a great place to start.