Thoughts have been swirling around in my head ever since I saw the recent Tested video. We all saw how the actual cutting deviated from the drawing on the material being cut. I thought to myself, what is to become of the pass-through feature if the Forge can’t use the lid camera to accurately align a new cutting session with the edge of what was previously cut?

@sam had a very insightful comment:

So it seems like there are three separable issues that must be tackled to get pass-through cutting to work.

–correcting for the distortion of the wide angle lens

–accurately measuring the thickness of the material (and adjusting the distortion correction accordingly!)

–accounting for material warp

I wanted to run some numbers to see just how much cutting error would remain if the first two issues were solved perfectly but the material were still warped, using realistic dimensions and expectations for the warp.

Getting someone to provide actual warping data proved to be tedious, so I decided to just treat it as a variable.


Suppose the material has a saddle-shaped warp. I’m guessing that is much more likely to happen than a bowl-shaped warp, and it should give conservative results by comparison.

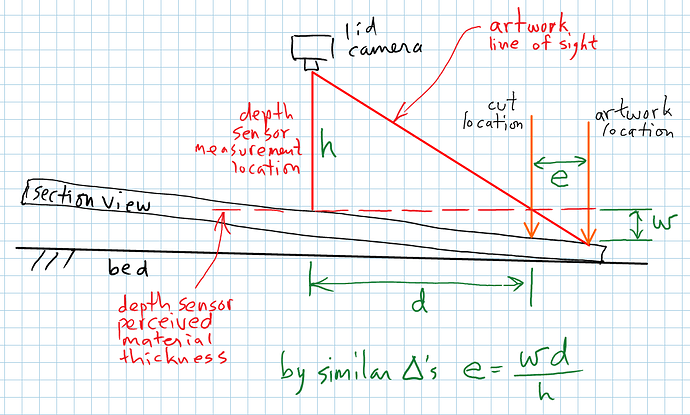

Now, imagine a section view of the material, with the section line passing through the point where the depth sensor is making its measurement and one point where the material is resting on the bed. For simplicity’s sake, let’s assume the material is flat (a straight line) along this cross section. I know, very unrealistic, but suitable for this very rough approximation.

Here’s the situation:

Let’s put in some typical numbers. The distance to the lens, h, is roughly 5" (thanks @marmak3261 !). A typical value for “d” might be 10".

That means the error in cutting, e, will be about twice the amount of warp, w, as measured in the drawing. That’s a lot.
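For anyone who wants to play with the numbers, here is a rough sketch of the arithmetic in Python. The geometry is my assumption, since it is reconstructed from the numbers rather than taken from the drawing: the lens sits h above the surface height the software assumes, the point of interest is a horizontal distance d from the lens axis, and it actually sits w higher than assumed. Extending the camera ray down to the assumed surface and using similar triangles gives e = d·w/(h − w), which for small w is about w·d/h. The function names are made up for illustration.

```python
def cutting_error(w, h=5.0, d=10.0):
    """Horizontal misplacement e (inches) of a point that sits w inches above
    the surface height the software assumes.

    Assumed geometry (a reconstruction, not the original drawing):
    the lens is h inches above the assumed surface and the point is
    d inches from the lens axis. Extending the camera ray down to the
    assumed surface gives, by similar triangles, e / d = w / (h - w).
    """
    if w >= h:
        raise ValueError("warp must be smaller than the lens height")
    return d * w / (h - w)


def cutting_error_small_warp(w, h=5.0, d=10.0):
    """Small-warp approximation e ~ w * d / h, valid when w is much less than h."""
    return w * d / h


if __name__ == "__main__":
    # Try a few plausible warp amounts with h = 5" and d = 10".
    for w in (1 / 32, 1 / 16, 1 / 8):
        print(f"w = {w:.4f} in   exact e = {cutting_error(w):.4f} in   "
              f"approx e = {cutting_error_small_warp(w):.4f} in")
```

With h = 5" and d = 10", the ratio d/h is 2, which is where the "about twice the warp" figure comes from. A warp of 1/16" would give an error of roughly 1/8" under these assumptions.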

My take-away from all this is that unless the Forge uses the camera in the head to characterize the shape of the material surface, and uses that information as part of the lid camera distortion correction algorithm, I see little hope of pass-through working satisfactorily on warped materials.

Furthermore, this problem also affects the simpler task of cutting artwork captured by the lid camera and aligning it with existing features on the material. This, in itself, may be more important than the pass-through issue!

Don’t get me wrong. I’m still very excited to receive my Forge, even if it has this limitation. I know there are brilliant people working on this problem at Glowforge, and for all I know, they already have a solution! May the Forge be with them! And also with you, soon.